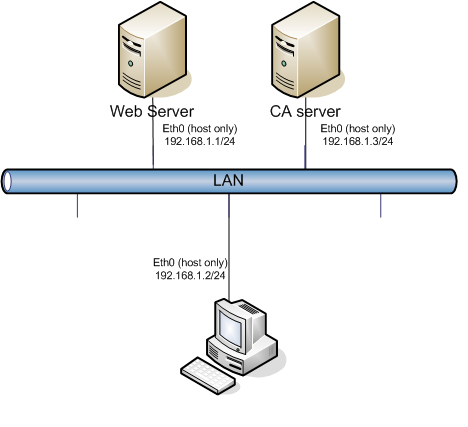

I. Lab topology

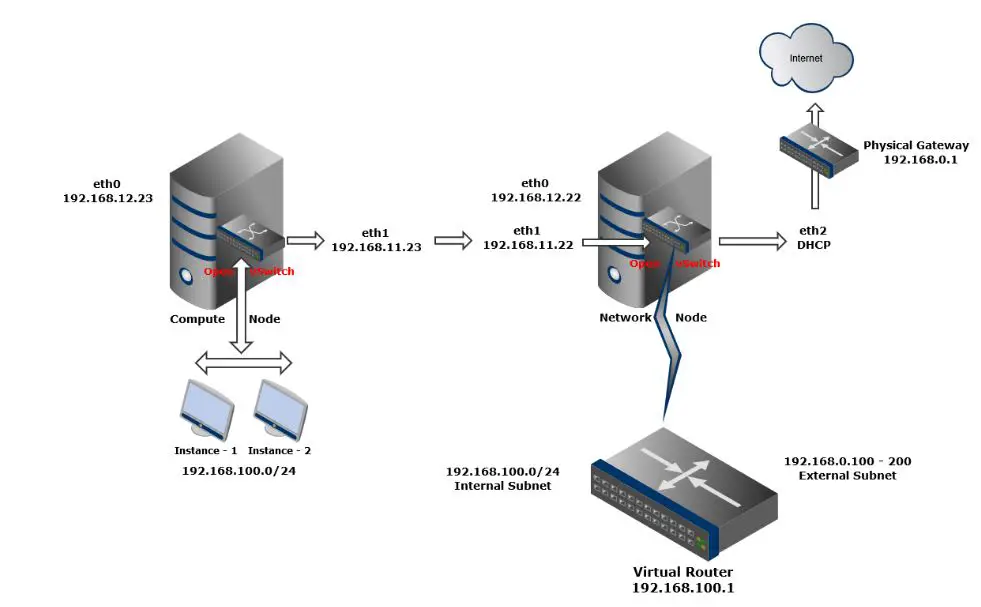

Prepare the system as shown in the diagram

1. Configure the network adapters in host-only mode

2. Configure the IP addresses as shown in the diagram and check connectivity

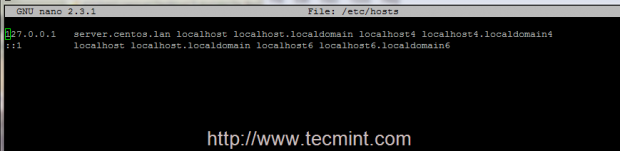

3. Configure DNS with the following records

| Server | Server name | IP address |
|---|---|---|
| CA server | ca.lablinux.vn | 192.168.1.3/24 |
| Web server | web.lablinux.vn | 192.168.1.2/24 |
| Client | client.lablinux.vn | 192.168.1.1/24 |
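If no DNS server is available in the lab, the same name-to-address mapping can be supplied through the hosts file on each machine. A minimal sketch, assuming the entries are first written to a scratch file named `hosts.lablinux` (a hypothetical name) so nothing under /etc is changed until you have reviewed it:

```shell
# Write the lab name/address pairs in /etc/hosts format.
# hosts.lablinux is a hypothetical scratch file; nothing under /etc is modified.
OUT=hosts.lablinux

# Addresses come from the table above; /etc/hosts takes plain addresses,
# so the /24 prefix length is dropped.
cat > "$OUT" <<'EOF'
192.168.1.3   ca.lablinux.vn
192.168.1.2   web.lablinux.vn
192.168.1.1   client.lablinux.vn
EOF

cat "$OUT"
```

After checking the file, append it on each of the three machines as root with `cat hosts.lablinux >> /etc/hosts`. This avoids running a DNS server, at the cost of editing every machine separately.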

4. Verify the configuration

[root@client tmp]# ping ca.lablinux.vn

[root@client tmp]# ping web.lablinux.vn
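The two ping commands can be extended into a loop that separates name-resolution failures from network failures. A minimal sketch; `check_host` is a hypothetical helper, and `-W` (reply timeout in seconds) is the option of the Linux iputils ping:

```shell
# Resolve each lab name and send one ping, printing a short status per host.
# check_host is a hypothetical helper; -W is the Linux iputils ping timeout.
check_host() {
    name="$1"
    # getent follows /etc/nsswitch.conf, so it sees both /etc/hosts and DNS
    addr=$(getent hosts "$name" | awk '{ print $1; exit }')
    if [ -z "$addr" ]; then
        echo "$name: does not resolve"
        return 1
    fi
    if ping -c 1 -W 2 "$name" >/dev/null 2>&1; then
        echo "$name -> $addr: reachable"
    else
        echo "$name -> $addr: no ping reply"
    fi
}

for h in ca.lablinux.vn web.lablinux.vn client.lablinux.vn; do
    check_host "$h"
done
```

A "does not resolve" line points at the DNS records (or hosts file), while "no ping reply" points at the host-only network or IP configuration.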

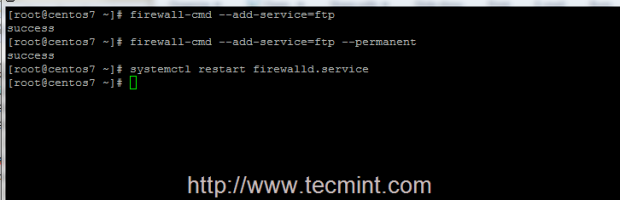

Setting up the Certificate Authority server

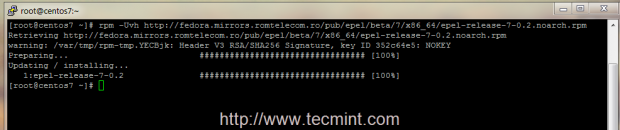

5. Check that OpenSSL is installed

[root@ca tmp]# rpm -q openssl

6. Create the base directory

[root@ca tmp]# mkdir -p -m 0755 /etc/pki

7. Create the directories and database files for the CA

[root@ca tmp]# mkdir -m 0755 /etc/pki/myCA /etc/pki/myCA/private /etc/pki/myCA/certs /etc/pki/myCA/newcerts /etc/pki/myCA/crl

[root@ca tmp]# touch /etc/pki/myCA/index.txt

[root@ca tmp]# echo '01' > /etc/pki/myCA/serial
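Steps 6 and 7 can be collected into one function that is safe to rerun when the lab is rebuilt. A minimal sketch; `make_ca_tree` is a hypothetical name, and it differs from the steps above in two labeled ways: `mkdir -p` tolerates existing directories, and `private/` is created with mode 0700 instead of 0755 so other users cannot list the key files:

```shell
# Create the CA directory tree and database files (steps 6-7) under a root dir.
# make_ca_tree is a hypothetical helper; it is safe to run more than once.
make_ca_tree() {
    root="$1"
    # -p: create missing parents and do not fail on existing directories
    mkdir -p -m 0755 "$root/certs" "$root/newcerts" "$root/crl"
    # Assumption: private/ is tightened to 0700 (the steps above use 0755)
    mkdir -p -m 0700 "$root/private"
    # index.txt: empty certificate database maintained by "openssl ca"
    [ -f "$root/index.txt" ] || : > "$root/index.txt"
    # serial: hexadecimal serial number for the next issued certificate
    [ -f "$root/serial" ] || echo '01' > "$root/serial"
}
```

Run it as root on the CA server with `make_ca_tree /etc/pki/myCA`. The existence checks keep a second run from emptying a certificate database that is already in use.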

8. Create the configuration file:

[root@ca pki]# cd /etc/pki/myCA

[root@ca myCA]# vi testssl.conf

[ ca ]

default_ca = CA_default # The default ca section

[ CA_default ]

dir = ./ # top dir

certs = $dir/certs

crl_dir = $dir/crl

database = $dir/index.txt # index file.

new_certs_dir = $dir/newcerts # new certs dir

certificate = $dir/certs/ca.crt # The CA cert

serial = $dir/serial # serial no file

private_key = $dir/private/ca.key # CA private key

RANDFILE = $dir/private/.rand # random number file

default_days = 365 # how long to certify for

default_crl_days= 30 # how long before next CRL

default_md = sha256 # md to use

policy = policy_any # default policy

email_in_dn = no # Don’t add the email into cert DN

name_opt = ca_default # Subject name display option

cert_opt = ca_default # Certificate display option

copy_extensions = none # Don’t copy extensions from request

[ policy_any ]

countryName = supplied

stateOrProvinceName = optional

organizationName = optional

organizationalUnitName = optional

commonName = supplied

emailAddress = optional
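`openssl ca` resolves `dir = ./` against the current directory, which is why the commands from step 8 on first change to /etc/pki/myCA. A path in the config that does not match the files actually created (for example the name of the CA key written in step 9) only surfaces when signing fails. A minimal pre-flight check, assuming simple `key = $dir/...` lines as in testssl.conf; `check_ca_paths` is a hypothetical helper, not part of OpenSSL:

```shell
# Report config entries whose paths do not exist, resolving $dir the way
# "openssl ca" does: relative to the current working directory.
# check_ca_paths is a hypothetical helper for simple "key = value" lines.
check_ca_paths() {
    conf="$1"
    # Value of "dir", stopping at whitespace or an inline # comment
    dir=$(sed -n 's/^[[:space:]]*dir[[:space:]]*=[[:space:]]*\([^#[:space:]]*\).*/\1/p' "$conf" | head -n 1)
    missing=0
    for key in certs crl_dir database new_certs_dir certificate serial private_key; do
        val=$(sed -n "s/^[[:space:]]*$key[[:space:]]*=[[:space:]]*\([^#[:space:]]*\).*/\1/p" "$conf" | head -n 1)
        [ -n "$val" ] || continue
        # Replace the literal text $dir with the value read above
        path=$(printf '%s\n' "$val" | sed "s|\\\$dir|$dir|")
        if [ ! -e "$path" ]; then
            echo "missing: $key -> $path"
            missing=1
        fi
    done
    return "$missing"
}
```

Run it from /etc/pki/myCA after step 9, once the CA certificate and key exist: `check_ca_paths testssl.conf`. It returns non-zero and lists each entry that points at a missing file.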

9. Create a self-signed certificate for the CA itself

[root@ca myCA]# cd /etc/pki/myCA

[root@ca myCA]# openssl req -new -x509 -keyout private/ca.key -out certs/ca.crt -days 1825

10. Restrict permissions to protect the private key

[root@ca tmp]# chmod 0400 /etc/pki/myCA/private/ca.key
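Steps 9 and 10 prompt for a passphrase and the subject fields. For scripted rebuilds of the lab, the same result can be produced without prompts. A minimal sketch, assuming the tree from step 7 exists, the passphrase is supplied in the environment variable `CA_PASS` (a hypothetical name), and the key size, digest and subject values (`C=VN`, `O=lablinux`) are example choices rather than values taken from the steps above:

```shell
# Non-interactive version of steps 9-10: self-signed CA certificate plus a
# passphrase-protected key that only root can read.
# create_ca, CA_PASS and the subject fields are illustrative assumptions.
create_ca() {
    ca_dir="$1"
    if [ -z "${CA_PASS:-}" ]; then
        echo "create_ca: set and export CA_PASS first" >&2
        return 1
    fi
    (
        cd "$ca_dir" || exit 1
        # -x509 emits a self-signed certificate instead of a request;
        # -passout env:CA_PASS encrypts the new key with that passphrase.
        openssl req -new -x509 -newkey rsa:2048 -sha256 \
            -keyout private/ca.key -out certs/ca.crt -days 1825 \
            -subj "/C=VN/O=lablinux/CN=ca.lablinux.vn" \
            -passout env:CA_PASS || exit 1
        # Step 10: read-only for the owner, no access for anyone else
        chmod 0400 private/ca.key
    )
}
```

Usage: `CA_PASS='your-passphrase'; export CA_PASS; create_ca /etc/pki/myCA`. An environment variable is convenient in a lab but can be read by other processes running as the same user, so the interactive prompt remains the safer choice for a real CA.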

Creating a certificate request on the web server

1. Check that OpenSSL is installed

[root@web tmp]# rpm -q openssl

2. Create the base directory (the lab keeps its files in its own tree instead of the default locations under /etc/pki/)

[root@web tmp]# mkdir -p -m 0755 /etc/pki

3. Create the directories that will hold the key and certificates

[root@web tmp]# mkdir -m 0755 /etc/pki/myCA /etc/pki/myCA/private /etc/pki/myCA/certs /etc/pki/myCA/newcerts /etc/pki/myCA/crl

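4. Create a certificate request:

[root@web tmp]# cd /etc/pki/myCA

[root@web myCA]# openssl req -new -nodes -keyout private/server.key -out server.csr

The validity period is set by the CA when it signs the request (default_days in testssl.conf), so no -days option is needed here.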

Note: the Common Name (CN) is the name of your service, that is, the host name clients use to reach it (web.lablinux.vn in this lab).
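Setting the subject on the command line avoids typing a wrong Common Name at the prompt. A minimal sketch, assuming the web server's tree from step 3; `make_server_csr` is a hypothetical helper, and `C=VN` and `O=lablinux` are example values (countryName and commonName are set because the CA's `policy_any` section marks both as `supplied`):

```shell
# Non-interactive version of step 4: unencrypted server key plus a CSR whose
# CN is the host name clients will use.
# make_server_csr and the C/O subject values are illustrative assumptions.
make_server_csr() {
    csr_dir="$1"
    cn="${2:-web.lablinux.vn}"
    # -nodes: no passphrase on the key, so Apache can start unattended
    openssl req -new -nodes -newkey rsa:2048 -sha256 \
        -keyout "$csr_dir/private/server.key" -out "$csr_dir/server.csr" \
        -subj "/C=VN/O=lablinux/CN=$cn"
}
```

Usage: `make_server_csr /etc/pki/myCA`, then `openssl req -in /etc/pki/myCA/server.csr -noout -subject` to confirm the subject before the request is sent to the CA.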

5. Restrict access to the private key file

[root@web myCA]# chown root:apache /etc/pki/myCA/private/server.key

[root@web myCA]# chmod 0440 /etc/pki/myCA/private/server.key

6. Send the certificate request to the CA server

[root@web myCA]# scp /etc/pki/myCA/server.csr root@ca.lablinux.vn:/etc/pki/myCA/

Issuing the certificate for the web server

1. Sign the certificate request

[root@ca ~]# cd /etc/pki/myCA/

[root@ca myCA]# openssl ca -config testssl.conf -out certs/server.crt -infiles server.csr
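Before signing real requests, the whole exchange (CA certificate, server request, signature, verification) can be rehearsed in a throwaway directory, which catches configuration mistakes without touching /etc/pki/myCA. A minimal sketch, assuming a scratch directory from `mktemp`, example subjects, and an unencrypted CA key (`-nodes`) that is acceptable only because this copy is discarded:

```shell
# Rehearse the CA workflow in a scratch directory before using /etc/pki/myCA.
# Assumptions: WORK is a throwaway directory, the subjects are examples, and the
# CA key is left unencrypted (-nodes) only because this copy is discarded.
WORK=$(mktemp -d)
cd "$WORK" || exit 1
mkdir certs crl newcerts private
: > index.txt
echo '01' > serial

# Trimmed-down config with the same section layout as testssl.conf
cat > testssl.conf <<'EOF'
[ ca ]
default_ca = CA_default
[ CA_default ]
dir = .
certs = $dir/certs
database = $dir/index.txt
new_certs_dir = $dir/newcerts
certificate = $dir/certs/ca.crt
serial = $dir/serial
private_key = $dir/private/ca.key
default_days = 365
default_md = sha256
policy = policy_any
[ policy_any ]
countryName = supplied
commonName = supplied
EOF

# CA certificate, as in step 9 of the CA setup
openssl req -new -x509 -nodes -newkey rsa:2048 -keyout private/ca.key \
    -out certs/ca.crt -days 1825 -subj "/C=VN/CN=ca.lablinux.vn"

# Request, as the web server creates it
openssl req -new -nodes -newkey rsa:2048 -keyout private/server.key \
    -out server.csr -subj "/C=VN/CN=web.lablinux.vn"

# -batch answers the "Sign the certificate?" and "commit?" prompts with yes
openssl ca -batch -config testssl.conf -out certs/server.crt -infiles server.csr

# Same check as the certificate verification step of this section
openssl verify -purpose sslserver -CAfile certs/ca.crt certs/server.crt
```

After a successful run, `index.txt` holds one line starting with `V` (valid), `newcerts/01.pem` is a copy of the issued certificate, and `serial` has advanced to `02`. Delete the scratch directory afterwards.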

2. Delete the certificate request

[root@ca myCA]# rm -f /etc/pki/myCA/server.csr

3. Inspect the certificate

[root@ca myCA]# openssl x509 -subject -issuer -enddate -noout -in /etc/pki/myCA/certs/server.crt

Or

[root@ca myCA]# openssl x509 -in certs/server.crt -noout -text

4. Verify the certificate against the CA certificate

[root@ca myCA]# openssl verify -purpose sslserver -CAfile /etc/pki/myCA/certs/ca.crt /etc/pki/myCA/certs/server.crt

5. Generate a new CRL (Certificate Revocation List):

[root@ca myCA]# openssl ca -config testssl.conf -gencrl -out crl/myca.crl
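The generated CRL can be checked for a valid CA signature and for its validity window. A minimal sketch; `check_crl` is a hypothetical helper whose default paths assume the current directory is /etc/pki/myCA, as in the command above. It matches the "verify OK" message printed by `openssl crl` rather than relying on the exit code:

```shell
# Check that the CRL was signed by the lab CA and show its validity window.
# check_crl is a hypothetical helper; the default paths assume the current
# directory is /etc/pki/myCA.
check_crl() {
    crl="${1:-crl/myca.crl}"
    cacert="${2:-certs/ca.crt}"
    # With -CAfile, "openssl crl" checks the signature and prints
    # "verify OK" on success; the message text is matched here.
    result=$(openssl crl -in "$crl" -CAfile "$cacert" -noout 2>&1)
    case "$result" in
        *"verify OK"*) ;;
        *) echo "$result" >&2; return 1 ;;
    esac
    # Issuer and lastUpdate/nextUpdate; the gap follows default_crl_days
    openssl crl -in "$crl" -noout -issuer -lastupdate -nextupdate
}
```

With `default_crl_days= 30`, nextUpdate should fall 30 days after lastUpdate; the CRL has to be regenerated before that date or clients that check it may reject certificates.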

6. Send the signed certificate back to the web server

[root@ca myCA]# scp /etc/pki/myCA/certs/server.crt root@web.lablinux.vn:/etc/pki/myCA/

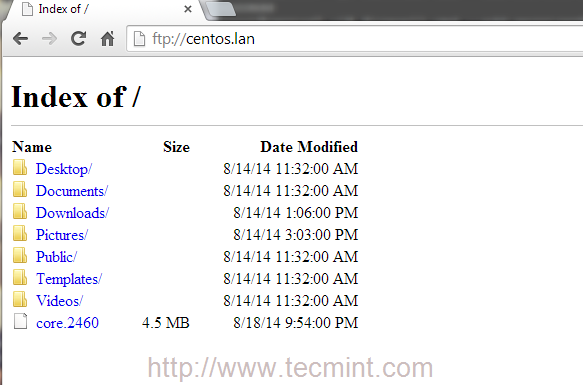

Configuring the web server to use the certificate

1. Copy the certificate and key to the locations Apache reads, keeping the existing default files as server1.crt and server1.key

[root@web myCA]# mv /etc/httpd/conf/ssl.crt/server.crt /etc/httpd/conf/ssl.crt/server1.crt

[root@web myCA]# cp /etc/pki/myCA/server.crt /etc/httpd/conf/ssl.crt/

[root@web myCA]# mv /etc/httpd/conf/ssl.key/server.key /etc/httpd/conf/ssl.key/server1.key

[root@web myCA]# cp /etc/pki/myCA/private/server.key /etc/httpd/conf/ssl.key/server.key
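mod_ssl typically refuses to start when the certificate's public key does not match the configured private key, so a mix-up during the copy shows up only as an httpd startup failure. A minimal check before starting the server; `cert_matches_key` is a hypothetical helper that compares the public key embedded in the certificate with the one derived from the key file:

```shell
# Check that a certificate and a private key belong together by comparing the
# public key inside the certificate with the one derived from the key file.
# cert_matches_key is a hypothetical helper.
cert_matches_key() {
    cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
    key_pub=$(openssl pkey -in "$2" -pubout) || return 1
    [ "$cert_pub" = "$key_pub" ]
}

# Paths used by the copy commands above
CRT=/etc/httpd/conf/ssl.crt/server.crt
KEY=/etc/httpd/conf/ssl.key/server.key
if [ -r "$CRT" ] && [ -r "$KEY" ]; then
    if cert_matches_key "$CRT" "$KEY"; then
        echo "certificate and key match"
    else
        echo "certificate and key do NOT match" >&2
    fi
fi
```

Comparing the full PEM public keys works for RSA and other key types alike, unlike comparing only the RSA modulus.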

2. Create a test web page

[root@web myCA]# cd /var/www/html/

[root@web html]# vi index.html

<html>

<head>

</head>

<body>

This is a test

</body>

</html>

3. Start the web server

[root@web html]# chkconfig httpd on

[root@web html]# service httpd start

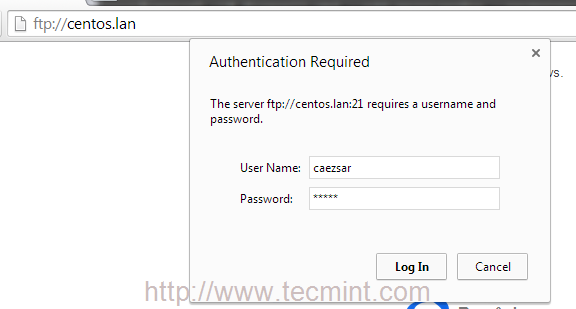

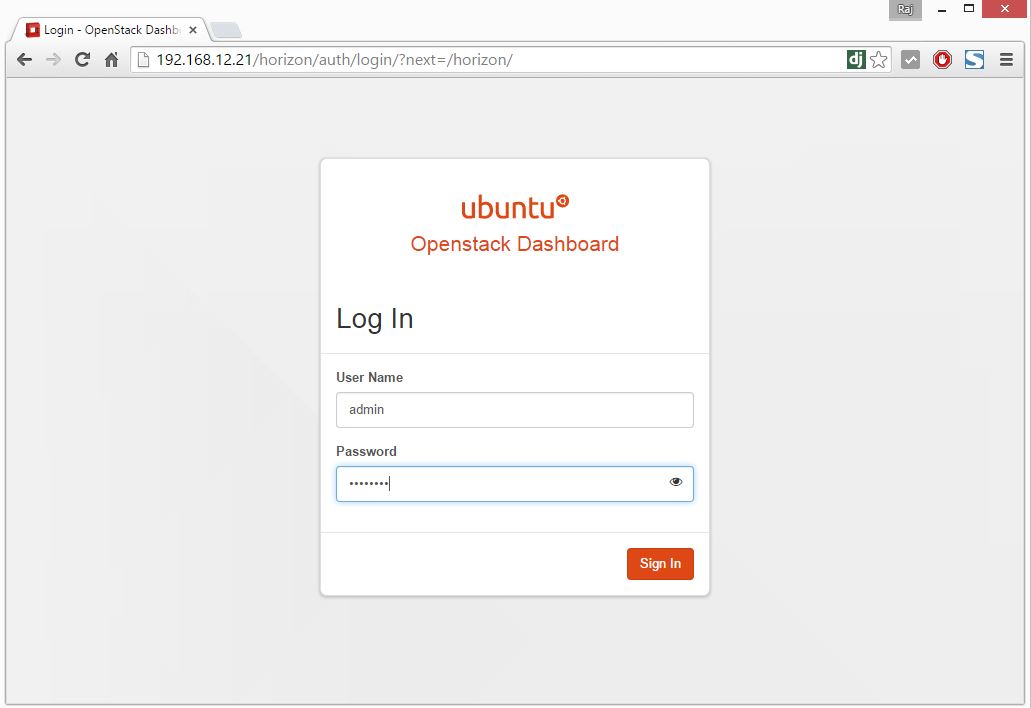

Configuring the client to use the certificate

On the client, open Internet Explorer and browse to http://web.lablinux.vn

Then browse to https://web.lablinux.vn
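Besides the browser, the same check can be run from a Linux shell on the client. A minimal sketch, assuming the CA certificate (certs/ca.crt on the CA server) has been copied to the client as `./ca.crt` (a hypothetical location) and the web server listens on the standard HTTPS port 443; `check_tls` is a hypothetical helper:

```shell
# Command-line counterpart of the browser test: connect to port 443, verify the
# server certificate against the lab CA certificate and check the host name.
# check_tls and the ./ca.crt location are assumptions for this sketch.
check_tls() {
    host="$1"
    cafile="$2"
    # -verify_return_error aborts the handshake when verification fails, so the
    # exit status reports the result; echo closes stdin so s_client exits.
    echo | openssl s_client -connect "$host:443" -servername "$host" \
        -CAfile "$cafile" -verify_hostname "$host" -verify_return_error \
        >/dev/null 2>&1
}

CA_CERT="${CA_CERT:-./ca.crt}"
if check_tls web.lablinux.vn "$CA_CERT"; then
    echo "web.lablinux.vn: certificate verified"
else
    echo "web.lablinux.vn: connection or verification failed"
fi
```

A failure here with a correct ca.crt usually means the CN in the server certificate does not match web.lablinux.vn, or Apache is still serving the old default certificate.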

Importing the CA certificate

a. Select Options