Apache Hadoop is an open-source software framework, written in Java, for distributed storage and distributed processing of very large data sets across clusters of computers. Rather than relying on hardware to provide high availability, Hadoop modules are designed to detect and handle failures at the application layer, so the cluster delivers a highly available service even when individual machines fail.

The Hadoop framework consists of the following modules:

- Hadoop Common – the common libraries and utilities that support the other Hadoop modules.

- Hadoop Distributed File System (HDFS) – a Java-based distributed file system that stores data and provides very high-throughput access to it for applications.

- Hadoop YARN – manages compute resources in the cluster and uses them to schedule users’ applications.

- Hadoop MapReduce – a YARN-based framework for large-scale parallel data processing.

This guide will help you install Apache Hadoop on Ubuntu 14.10 or CentOS 7.

Prerequisites:

Since Hadoop is written in Java, make sure a Java JDK is installed on the system. If your machine doesn’t have Java, follow the steps below; if it is already installed, you can skip them.

Download Oracle Java with the following command (this assumes a 64-bit operating system).

# wget --no-check-certificate --no-cookies --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u5-b13/jdk-8u5-linux-x64.tar.gz

Extract the downloaded archive and move it to /usr.

# tar -zxvf jdk-8u5-linux-x64.tar.gz

# mv jdk1.8.0_05/ /usr/
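Before pointing JAVA_HOME at the new directory, it can help to confirm the archive landed where you expect. The function below is a minimal sketch; `check_jdk` is a hypothetical helper name, and it only checks that `bin/java` and `bin/javac` exist and are executable.

```shell
#!/usr/bin/env bash
# Sanity-check an extracted JDK directory before using it as JAVA_HOME.
# check_jdk is a hypothetical helper, not part of the JDK or Hadoop.
check_jdk() {
  local jdk_dir="${1%/}" tool   # drop a trailing slash for tidy messages
  for tool in java javac; do
    if [ ! -x "$jdk_dir/bin/$tool" ]; then
      echo "missing or not executable: $jdk_dir/bin/$tool" >&2
      return 1
    fi
  done
  echo "ok: $jdk_dir"
}

# Usage: check_jdk /usr/jdk1.8.0_05 && /usr/jdk1.8.0_05/bin/java -version
```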

Create Hadoop user:

It is recommended to configure Apache Hadoop under a regular (non-root) user. Create one with the following commands.

# useradd -m -d /home/hadoop hadoop

# passwd hadoop

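Once the user is created, configure passwordless SSH to the local system. Switch to the new user, generate an SSH key (press Enter at each prompt to accept the default key location and an empty passphrase), and add the public key to the authorized keys:

# su - hadoop

$ ssh-keygen

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys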
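Re-running the `cat ... >> ~/.ssh/authorized_keys` step appends a duplicate key each time. A minimal idempotent sketch (the helper name `authorize_key` is hypothetical) appends the key only when it is missing and sets the conventional owner-only permissions (700 on the directory, 600 on the file).

```shell
#!/usr/bin/env bash
# Idempotent variant of the `cat id_rsa.pub >> authorized_keys` step.
# authorize_key is a hypothetical helper; both paths are arguments so it
# can be tried on scratch files first.
authorize_key() {
  local pubkey="$1" auth_file="$2" ssh_dir key
  ssh_dir="$(dirname "$auth_file")"
  key="$(cat "$pubkey")" || return 1
  # With sshd's default StrictModes, group/world-writable key files are
  # rejected, so keep access owner-only.
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth_file" && chmod 600 "$auth_file" || return 1
  # Append the key only if that exact line is not already present.
  grep -qxF -- "$key" "$auth_file" || printf '%s\n' "$key" >> "$auth_file"
}

# Usage (as the hadoop user): authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```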

Verify passwordless login to the local system. If this is your first SSH connection to it, type “yes” to add the host key to the list of known hosts.

$ ssh 127.0.0.1

Download Hadoop:

You can visit the Apache Hadoop download page to get the latest release, or simply run the following commands (as the hadoop user, from its home directory) to download Hadoop 2.6.0, extract it, and rename the extracted directory so that Hadoop ends up in /home/hadoop/hadoop.

$ wget http://apache.bytenet.in/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

$ tar -zxvf hadoop-2.6.0.tar.gz

$ mv hadoop-2.6.0 hadoop

Install Apache Hadoop:

Hadoop supports three cluster modes:

- Local (Standalone) Mode – Hadoop runs as a single Java process.

- Pseudo-Distributed Mode – each Hadoop daemon runs in a separate Java process on a single machine.

- Fully Distributed Mode – a real multi-node cluster, ranging from a few nodes to an extremely large cluster.

Set up environment variables:

Here we configure Hadoop in Pseudo-Distributed mode. Start by adding the following environment variables to the ~/.bashrc file. JAVA_HOME must point to the directory the JDK was moved to earlier (/usr/jdk1.8.0_05).

$ vi ~/.bashrc

export JAVA_HOME=/usr/jdk1.8.0_05/
export HADOOP_HOME=/home/hadoop/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
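Editing ~/.bashrc by hand is easy to repeat by accident, leaving duplicate exports behind. As a hedged alternative, the sketch below appends the same exports once, guarded by a marker comment; `add_hadoop_env` and the marker text are hypothetical, and the JDK and Hadoop paths are passed in as arguments.

```shell
#!/usr/bin/env bash
# Append the Hadoop environment block to a shell rc file exactly once.
# add_hadoop_env and the "# >>> hadoop env >>>" marker are hypothetical;
# the variable list mirrors the ~/.bashrc exports in this guide.
add_hadoop_env() {
  local rc_file="$1" java_home="$2" hadoop_home="$3"
  local marker='# >>> hadoop env >>>'
  # Skip if a previous run already added the block.
  if [ -f "$rc_file" ] && grep -qxF -- "$marker" "$rc_file"; then
    return 0
  fi
  # Unescaped variables expand now; \$ escapes stay literal in the rc file.
  cat >> "$rc_file" <<EOF
$marker
export JAVA_HOME=$java_home
export HADOOP_HOME=$hadoop_home
export HADOOP_INSTALL=\$HADOOP_HOME
export HADOOP_MAPRED_HOME=\$HADOOP_HOME
export HADOOP_COMMON_HOME=\$HADOOP_HOME
export HADOOP_HDFS_HOME=\$HADOOP_HOME
export HADOOP_YARN_HOME=\$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=\$HADOOP_HOME/lib/native
export PATH=\$PATH:\$HADOOP_HOME/sbin:\$HADOOP_HOME/bin
# <<< hadoop env <<<
EOF
}

# Usage: add_hadoop_env ~/.bashrc /usr/jdk1.8.0_05/ /home/hadoop/hadoop
```

Run it as the hadoop user; `source ~/.bashrc` then applies the variables as usual.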

Apply the environment variables to the current session.

$ source ~/.bashrc

Modify configuration files:

Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and set the JAVA_HOME environment variable.

export JAVA_HOME=/usr/jdk1.8.0_05/
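If you prefer not to open an editor, sed can rewrite that line in place. This is a sketch under a few assumptions: GNU sed (standard on Ubuntu and CentOS), a stock file containing a line that starts with `export JAVA_HOME=` (in 2.x it typically reads `export JAVA_HOME=${JAVA_HOME}`), and a JDK path without `|` or `&` characters. `set_hadoop_java_home` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Set JAVA_HOME in hadoop-env.sh without an editor.
# set_hadoop_java_home is a hypothetical helper; assumes GNU sed (-i) and a
# java_home path containing no '|' or '&' (they are special to sed here).
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Replace the existing JAVA_HOME line in place.
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    # No such line yet: append one.
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}

# Usage: set_hadoop_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /usr/jdk1.8.0_05/
```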

Hadoop has many configuration files, and which ones you need to edit depends on the cluster mode. Since we are setting up a Pseudo-Distributed cluster, edit the following files.

$ cd $HADOOP_HOME/etc/hadoop

Edit core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>

Edit hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
  </property>
</configuration>

Edit mapred-site.xml, creating it from the bundled template first:

$ cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Edit yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
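The four files above can also be generated in one step, which is handy when rebuilding a test machine. This is a sketch: `write_pseudo_configs` is a hypothetical helper, it overwrites any existing copies in the target directory, and it writes mapred-site.xml directly instead of copying the template.

```shell
#!/usr/bin/env bash
# Write the four pseudo-distributed config files from this guide in one go.
# write_pseudo_configs is a hypothetical helper. It OVERWRITES the files in
# conf_dir, so back up anything you have already customised.
#   conf_dir: usually $HADOOP_HOME/etc/hadoop
#   data_dir: base for the HDFS storage dirs (this guide: /home/hadoop/hadoopdata)
write_pseudo_configs() {
  local conf_dir="$1" data_dir="$2"
  mkdir -p "$conf_dir" || return 1

  cat > "$conf_dir/core-site.xml" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/hdfs-site.xml" <<EOF
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file://${data_dir}/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file://${data_dir}/hdfs/datanode</value>
  </property>
</configuration>
EOF

  # Written directly, so the template copy step is not needed.
  cat > "$conf_dir/mapred-site.xml" <<EOF
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF

  cat > "$conf_dir/yarn-site.xml" <<EOF
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}

# Usage: write_pseudo_configs "$HADOOP_HOME/etc/hadoop" /home/hadoop/hadoopdata
```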

Now format the NameNode with the following command. Check the output to confirm that the storage directory (the NameNode directory set in hdfs-site.xml) was formatted successfully.

$ hdfs namenode -format
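To check the storage directory from the command line, you can look for the metadata the format step writes. This sketch assumes a successfully formatted NameNode directory contains `current/VERSION`; `check_namenode_dir` is a hypothetical name, and it accepts either a plain path or the file:/// URI used in hdfs-site.xml.

```shell
#!/usr/bin/env bash
# Confirm that `hdfs namenode -format` initialised the NameNode storage dir.
# check_namenode_dir is a hypothetical helper; it relies on a formatted
# directory containing current/VERSION.
check_namenode_dir() {
  # Accept either a plain path or the file:/// URI from hdfs-site.xml.
  local dir="${1#file://}"
  if [ -f "$dir/current/VERSION" ]; then
    echo "formatted: $dir"
  else
    echo "not formatted (no current/VERSION): $dir" >&2
    return 1
  fi
}

# Usage: check_namenode_dir file:///home/hadoop/hadoopdata/hdfs/namenode
```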

Start the NameNode and DataNode daemons with the scripts provided by Hadoop; make sure you are in Hadoop’s sbin directory.

$ cd $HADOOP_HOME/sbin/

$ start-dfs.sh

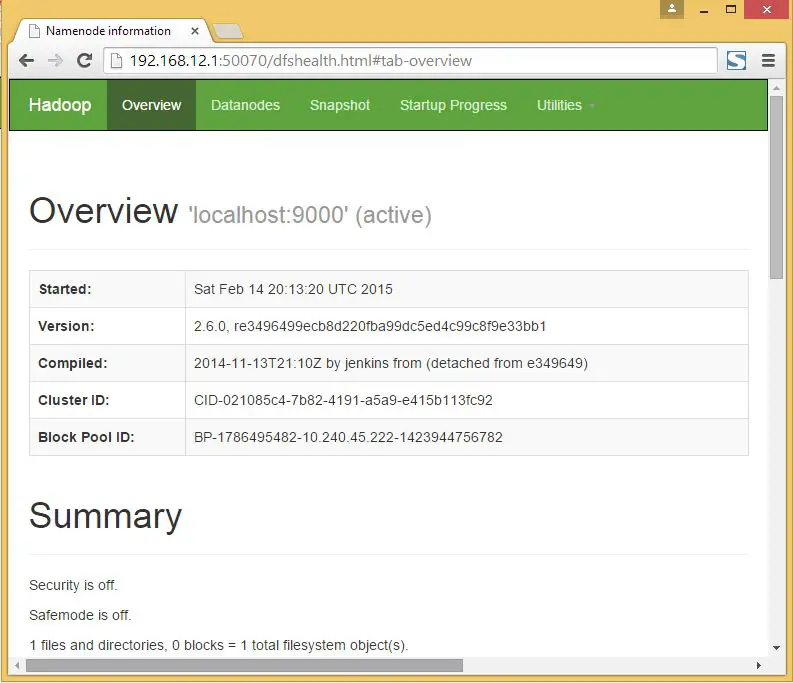

Browse the web interface for the NameNode; by default it is available at: http://your-ip-address:50070/

Start the ResourceManager and NodeManager daemons:

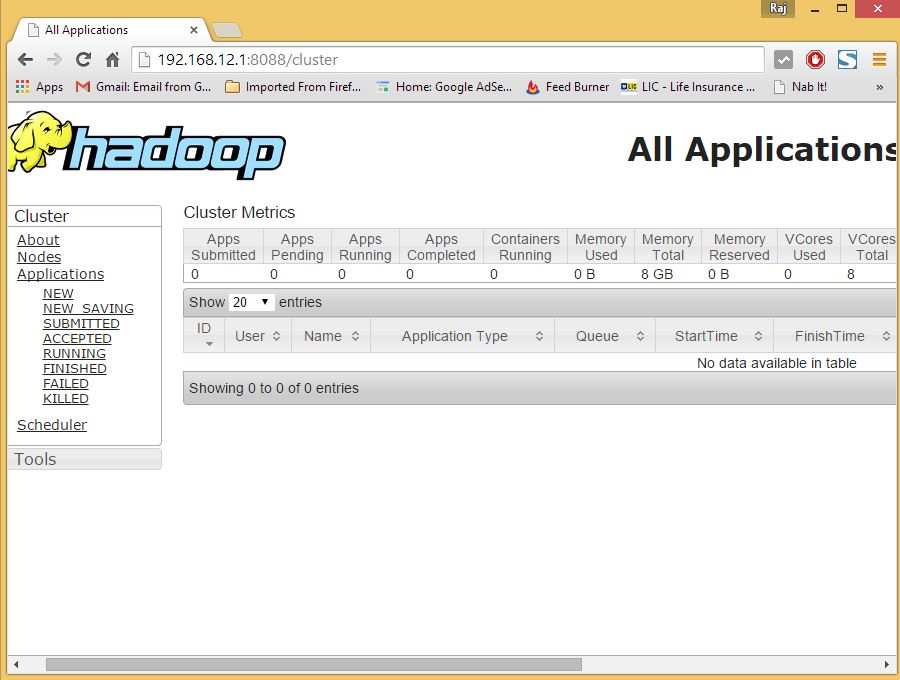

$ start-yarn.sh

Browse the web interface for the ResourceManager; by default it is available at: http://your-ip-address:8088/
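Besides the web interfaces, the JDK’s `jps` tool lists running Java processes, which gives a quick command-line check that start-dfs.sh and start-yarn.sh brought up every daemon. In a pseudo-distributed setup you would typically expect NameNode, DataNode and SecondaryNameNode from start-dfs.sh, plus ResourceManager and NodeManager from start-yarn.sh. The sketch below (`check_daemons` is a hypothetical helper) reads jps output and reports any that are missing; because this guide only adds Hadoop’s directories to PATH, the usage line calls jps through $JAVA_HOME/bin.

```shell
#!/usr/bin/env bash
# Report which Hadoop daemons are missing from `jps` output on stdin.
# check_daemons is a hypothetical helper; jps prints "<pid> <name>" per JVM.
check_daemons() {
  local running d missing=0
  running="$(awk '{print $2}')"   # keep only the process names from stdin
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    if ! grep -qxF -- "$d" <<< "$running"; then
      echo "missing: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: "$JAVA_HOME/bin/jps" | check_daemons && echo "all daemons running"
```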

Testing Hadoop single node cluster:

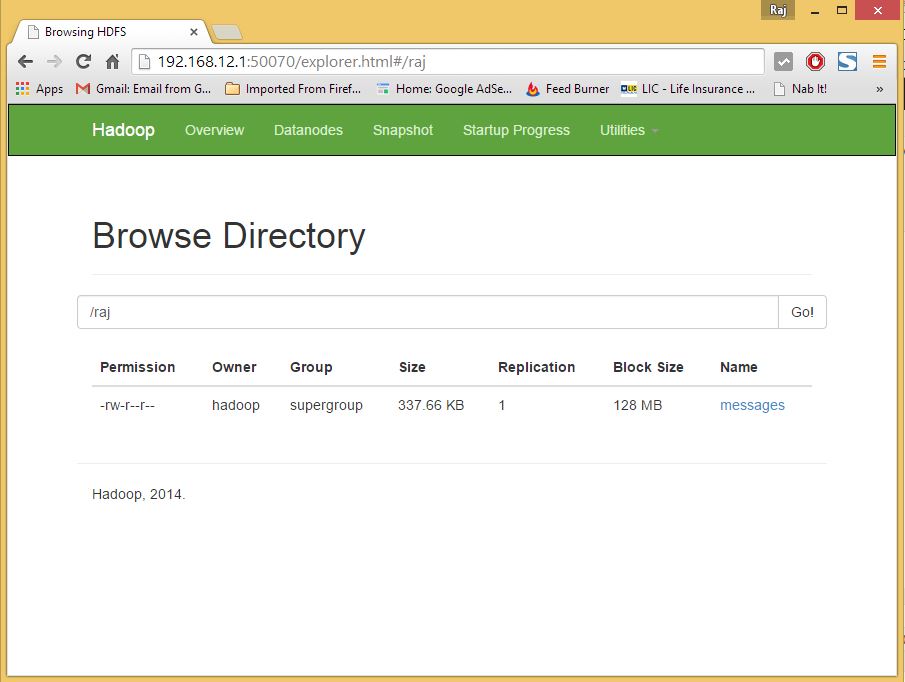

Before uploading, let’s create a directory in HDFS to hold the files.

$ hdfs dfs -mkdir /raj

Let’s upload the messages file into the HDFS directory called “raj”. Any local file the hadoop user can read will work; /var/log/messages is usually readable only by root, and on Ubuntu the system log is /var/log/syslog instead.

$ hdfs dfs -put /var/log/messages /raj

You can view the uploaded files at http://your-ip-address:50070/explorer.html#/raj

Copy the files from HDFS back to your local file system.

$ hdfs dfs -get /raj /tmp/

You can delete the files and directories using the following commands.

$ hdfs dfs -rm /raj/messages

$ hdfs dfs -rm -r /raj
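The upload, download and cleanup steps above can be rolled into one smoke test that also checks the file survives the round trip unchanged. This is a sketch: `hdfs_roundtrip` and the default scratch path /smoketest are hypothetical, it assumes `hdfs` is on the PATH via the ~/.bashrc exports, and it deletes the scratch HDFS directory when done, so pick a path that holds no real data.

```shell
#!/usr/bin/env bash
# Round-trip smoke test for a single-node HDFS: put a local file, get it
# back, compare the bytes, then clean up. hdfs_roundtrip and /smoketest
# are hypothetical names; the scratch HDFS directory is DELETED afterwards.
hdfs_roundtrip() {
  local src="$1" hdfs_dir="${2:-/smoketest}"
  local name tmp rc
  name="$(basename "$src")"
  tmp="$(mktemp -d)" || return 1

  hdfs dfs -mkdir -p "$hdfs_dir" &&
    hdfs dfs -put "$src" "$hdfs_dir/" &&
    hdfs dfs -get "$hdfs_dir/$name" "$tmp/" &&
    cmp -s "$src" "$tmp/$name"   # identical bytes => round trip succeeded
  rc=$?

  # Clean up both the HDFS scratch directory and the local temp copy.
  hdfs dfs -rm -r -f "$hdfs_dir" >/dev/null 2>&1
  rm -rf "$tmp"
  return "$rc"
}

# Usage: hdfs_roundtrip ~/.bashrc && echo "HDFS round trip OK"
```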

That’s all! You have successfully configured a single-node Hadoop cluster.